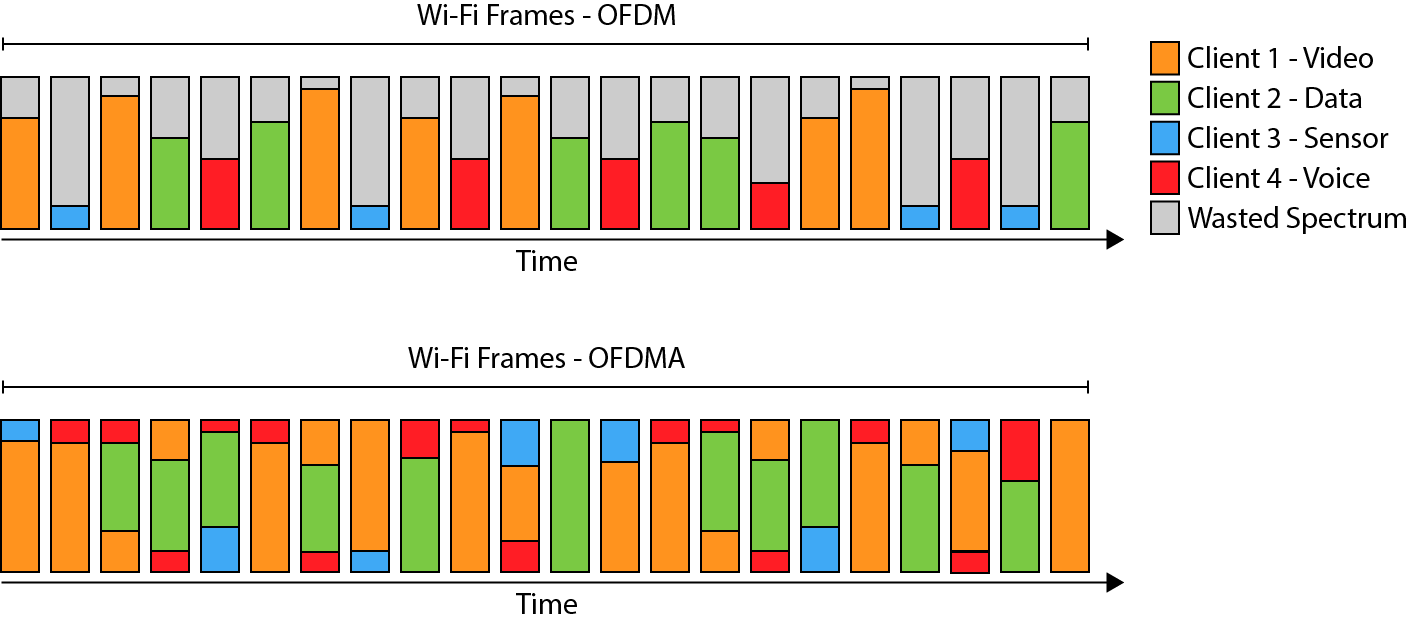

So, to step out of the analogy for a moment: what does this look like in practice in a WiFi transmission? The genius of OFDMA lies in letting the infrastructure plan each frame around the needs of its clients, using the information it has about them. In WiFi 6, an entire 20 MHz channel is divided into 256 narrow subchannels (subcarriers), and those subchannels can be doled out to the clients as needed, as well as decoded separately to sort out whose parcels are whose. To briefly return to our analogy, that means 256 equal-size parcels per truck, arranged in whatever way makes the most sense for the overall network.
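To put rough numbers on that 256-way split, here is a minimal Python sketch. It is an illustration only, not how an access point actually schedules: real WiFi 6 infrastructure hands out subcarriers in fixed-size groups and holds some of them in reserve, while this toy simply divides all 256 in proportion to demand. The client names and demand weights are hypothetical.

```python
# Toy illustration only: real WiFi 6 access points assign subcarriers in
# fixed-size groups and reserve some of them. This is NOT the 802.11ax scheduler.

CHANNEL_WIDTH_HZ = 20_000_000  # one 20 MHz channel
SUBCARRIERS = 256              # subcarriers per 20 MHz channel in WiFi 6


def subcarrier_spacing_hz(width_hz=CHANNEL_WIDTH_HZ, count=SUBCARRIERS):
    """Width of a single subcarrier: 20 MHz / 256 = 78.125 kHz."""
    return width_hz / count


def split_subcarriers(demands, total=SUBCARRIERS):
    """Divide `total` subcarriers among clients in proportion to demand.

    `demands` maps a (hypothetical) client name to a positive integer weight.
    """
    weight = sum(demands.values())
    if weight <= 0:
        raise ValueError("total demand must be positive")
    # Whole-number share for each client, rounded down.
    shares = {name: total * d // weight for name, d in demands.items()}
    # Rounding down can leave a few subcarriers unassigned; give them
    # one at a time to the clients with the largest demand.
    leftover = total - sum(shares.values())
    for name in sorted(demands, key=demands.get, reverse=True)[:leftover]:
        shares[name] += 1
    return shares


if __name__ == "__main__":
    print(subcarrier_spacing_hz())
    print(split_subcarriers({"camera": 6, "sensor": 1, "remote": 1}))
```

In truck terms, the hypothetical camera is the big shipper that takes most of the load space, while the sensor and the remote each get a small corner of the same truck instead of waiting for one of their own.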

This analogy isn’t perfect (for one, you should never load out just the left-hand side of a truck), but it expresses the key benefit of OFDMA: better management and utilization of the empty space inside the average WiFi frame. The “MA” in OFDMA (multiple access) is the really transformative development. The previous method, OFDM (which stands for Orthogonal Frequency‑Division Multiplexing), was created to carry many signals at once on closely spaced subcarriers (creating the frame / truck structure in the first place) while preventing those signals from interfering with each other. OFDMA builds on the same idea with more sophistication.

Impact on Throughput: How Much More Can We Send?

As you can probably imagine when you envision that fully loaded truck, more data is getting to the delivery point, and faster. But how much more, and how much faster? That depends on what kind of shipper you are, and what your demands are. The big shippers that were already packing out most of the truck correspond to high-volume data devices: those streaming high-quality video or carrying other payload-intensive traffic. And, unsurprisingly, they’re unlikely to see much of a change in their delivery schedules. It’s still a lot of data, and it’s still got to wait in line.

The big advantages are for the lightweight shippers, those with a box or two. These correspond to sensors, remote controls, and other devices that communicate infrequently and send very little data when they do. By many estimates, these devices can see as much as a 3x reduction in latency. All of this is managed by the infrastructure, based on what it knows about the client, what kind of device and traffic is characteristic of its connection, and what is available.

It’s also important to note that the improvement in latency is not universal. It depends entirely on the kinds of devices on the network and what efficiencies can be wrung out of their traffic. That makes it impossible to give one solid figure for the decrease in latency, which is why 3x is an estimate, not a rule. The important point is that the overall network gets closer to 100% utilization of its bandwidth, cutting out empty space as much as possible and giving important smaller data packets the opportunity to be delivered much faster than before. Again, everyone wins.
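To see why the gain depends so heavily on the traffic mix, here is a toy Python model. Everything in it is an assumption made for illustration: the function names, the fixed per-frame overhead, and the payload times are invented, and the model ignores contention, retries, and real scheduling. It compares small clients sent one frame at a time against the same clients sharing a frame, where each one gets only a slice of the channel and so takes proportionally longer to send its payload.

```python
# Toy latency model with invented numbers. It is not a measurement and not a
# simulation of real 802.11ax behavior; it only shows how the comparison works.

def serial_latencies(payload_us, overhead_us):
    """One client per frame: each client waits for every frame ahead of it."""
    finished, clock = [], 0.0
    for payload in payload_us:
        clock += overhead_us + payload
        finished.append(clock)
    return finished


def shared_latencies(payload_us, overhead_us, users_per_frame):
    """Several clients share each frame and finish together.

    Each client in a group of n gets 1/n of the channel, so its payload
    is assumed to take n times longer than it would on the full channel.
    """
    finished, clock = [], 0.0
    for start in range(0, len(payload_us), users_per_frame):
        group = payload_us[start:start + users_per_frame]
        clock += overhead_us + max(group) * len(group)
        finished.extend([clock] * len(group))
    return finished


def mean(values):
    return sum(values) / len(values)


if __name__ == "__main__":
    # Hypothetical: nine sensors, 10 us of payload each, 100 us of overhead per frame.
    sensors = [10.0] * 9
    print(mean(serial_latencies(sensors, 100.0)))
    print(mean(shared_latencies(sensors, 100.0, users_per_frame=9)))
```

The ratio between the two results comes entirely from the assumed overhead and payload sizes. Swap in large payloads and the advantage shrinks, which is the big-shipper case described above.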

…But It’s Not Just About Data

One last, important thought on latency takes us back to where we started in this blog post. Latency is important for time-sensitive data, it’s important for wireless applications, and it’s obviously more important for devices and traffic that are health or safety focused. But lowering latency is about more than that. It can have a huge impact on battery-operated devices in particular, the kinds of devices that are on the go and can’t afford to spend more battery than necessary to carry out their tasks.

With wireless devices, power is wasted when poor latency or transmission retries keep the radio on longer than necessary. That’s part of why OFDM was designed as it was, with separate frames and buffer time in between to protect each from the next. Limited interference means a cleaner link, fewer retries, and a better application.

OFDMA takes this to the next logical step, and in so doing further reduces the time devices spend waiting to send their data, receive acknowledgement, and go back to a lower-power state. Bigger devices with bigger batteries, or devices running on AC power, have less to worry about in this regard. But small, battery-conscious devices can last much, much longer on a single charge when their battery isn’t being monopolized by the wireless link.

WiFi 6 and 6E represent a major improvement in how battery-operated devices manage their available power: they minimize the time these devices must spend in a higher-power state to transmit data, which makes for a better user experience overall. And it couldn’t come at a better time, as the number of WiFi devices continues to explode and the infrastructure’s management of all its clients becomes more and more complex.
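The link between radio-on time and battery life can be sketched with a simple duty-cycle estimate. The Python below is a back-of-the-envelope calculation only: the function name and every number in it (battery capacity, awake and sleep currents, seconds awake per hour) are hypothetical and do not describe any particular device.

```python
# Back-of-the-envelope duty-cycle estimate. All values are hypothetical.

def battery_life_hours(capacity_mah, awake_ma, sleep_ma, awake_s_per_hour):
    """Estimate battery life from the fraction of each hour spent awake."""
    awake_fraction = awake_s_per_hour / 3600.0
    # Average current is the time-weighted mix of awake and sleep current.
    average_ma = awake_ma * awake_fraction + sleep_ma * (1.0 - awake_fraction)
    return capacity_mah / average_ma


if __name__ == "__main__":
    # Hypothetical sensor: 1000 mAh battery, 200 mA awake, 0.1 mA asleep.
    before = battery_life_hours(1000, 200, 0.1, awake_s_per_hour=30)
    after = battery_life_hours(1000, 200, 0.1, awake_s_per_hour=10)
    print(before, after)
```

Because the awake current in this example is so much larger than the sleep current, cutting the seconds spent awake each hour stretches the estimated battery life almost in proportion.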

Examples

There are many WiFi applications for which low latency is crucial. In many of them, a delay can cause real problems, including desynchronization between connected devices that jeopardizes their expected behavior.

- Machine Control / Robotics: In automated machine control, multiple machines or robotics systems often operate in collaboration, synchronizing their movements with each other within tight tolerances. For example, one machine may pick up an assembly component, apply an adhesive, and then pass it off to the next station, where another machine picks and installs the part. It’s critical that these machines work together correctly, and that they stop simultaneously in the event of an emergency stop. Low latency in WiFi 6 means better coordination and safety for machine control.

- Lighting Control: In indoor venues, lighting can be connected to WiFi to achieve centralized and synchronized control over lights across a large area. In the case of something like theater and stage lighting, that control needs to be very low latency in order to look and feel correct: when bringing down the house lights, if some fall out of sync, the visual effect is uncoordinated and breaks the experience. The same goes for decorative lighting effects that are part of the show itself, which are highly timing-sensitive. Giving these lighting controls a route to ultra-low latency lets the engineering booth create a better, more cohesive experience.

Conclusion

OFDMA is a major step forward that brings WiFi in line with other technologies, notably LTE, that use smarter spectrum management to serve a multitude of devices effectively. That leads into the topic of part 4 of this series: Device Density. We’ll return to how OFDMA supports this much higher device count, as well as other technologies involved, such as MU-MIMO and BSS coloring, which help support environments with dozens of tightly collocated devices. Consider a hospital room, which can easily contain dozens of devices. Then consider the wing of a hospital, with two dozen rooms. Then consider that there are floors full of these wings.

This is why the time for WiFi 6 and 6E is now. The challenges are great, but these new standards are well positioned to support the fully wireless world of the near future.

Subscribe to our blog series to get updates on our next post and many more as we continue our 2022 series on WiFi 6 and 6E.

Laird Connectivity is now Ezurio
